WUDAO: be enlightened by the truth of the universe through the biggest AI model in the world

Dao is a Chinese philosophical concept signifying the “truth”. In Chinese, WUDAO means to realize the truth of the universe. At its annual academic conference today, the Beijing Academy of Artificial Intelligence (BAAI) announced version 2.0 of WUDAO, a pre-trained deep learning model that the lab claims is “the world’s largest ever”, with 1.75 trillion parameters: 150 billion more than Google’s Switch Transformer and 10 times as many as OpenAI’s GPT-3.

Another distinctive feature of WUDAO 2.0 is that it is a multimodal model, trained to tackle both Natural Language Processing and Computer Vision tasks, two dramatically different types of problems. During the live demonstration, the model performed text generation tasks such as writing poems in traditional Chinese styles, writing articles, and generating alt text for images, as well as image generation tasks such as producing images that match text descriptions. In collaboration with a Microsoft spin-off company in China, it has also become the brain that powers XiaoBing, a “virtual assistant” like Apple’s Siri or Amazon’s Alexa.

The Chinese lab has tested WUDAO’s sub-models on industry-standard datasets and claims that they outperform previous models. For example, it reports beating OpenAI’s CLIP on the ImageNet zero-shot SoTA benchmark, beating OpenAI’s CLIP and Google’s ALIGN on image-text retrieval on the Microsoft COCO dataset, and beating OpenAI’s DALL-E on text-to-image generation with the WUDAO 2.0 sub-model CogView.

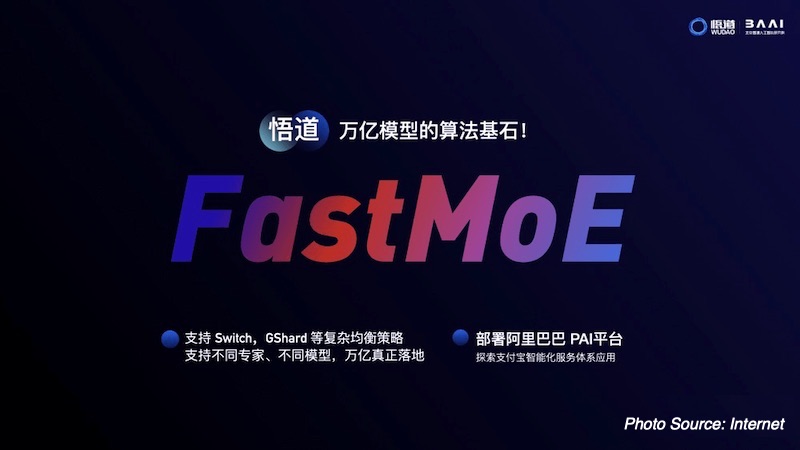

FastMoE: a Made-in-China AI Deep Learning System that enabled the speedy upgrade of WUDAO from 1.0 to 2.0

Looking back, WUDAO 1.0 was launched only at the end of March 2021. What allowed it to progress so quickly, in less than 3 months? The answer is FastMoE (https://fastmoe.ai), an easy-to-use and efficient system for training Mixture of Experts (MoE) models, which the Chinese lab has already open-sourced. Because it supports both data parallelism and model parallelism, FastMoE allowed WUDAO to be trained with significantly more parameters on both supercomputers and regular GPUs.

BAAI’s FastMoE is more accessible and more flexible than Google’s MoE (Mixture of Experts) implementation. Google’s MoE requires its dedicated TPU hardware and its exclusive distributed training framework, while FastMoE works with PyTorch, an industry-standard framework open-sourced by Facebook, and can run on various combinations of off-the-shelf hardware.
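To make the Mixture of Experts idea more concrete, here is a minimal sketch in plain Python. It is not FastMoE’s API and not WUDAO’s actual architecture: the names `moe_forward`, `make_expert` and `gate_weights`, the toy “experts” that merely scale their input, and the top-2 routing are all assumptions chosen for illustration. The sketch shows the general principle: a small gate scores every expert for a given input, only the best-scoring few are executed, and their outputs are blended.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def make_expert(scale):
    """Hypothetical toy expert: multiplies every feature by `scale`."""
    return lambda x: [scale * v for v in x]


def moe_forward(x, gate_weights, experts, top_k=2):
    """Route input `x` to the `top_k` best-scoring experts and blend their outputs."""
    # One gate score per expert: dot product of that expert's gate row with x.
    logits = [sum(w * v for w, v in zip(row, x)) for row in gate_weights]
    # Keep only the indices of the top_k highest-scoring experts.
    ranked = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)
    chosen = ranked[:top_k]
    # Normalize the scores of the chosen experts into mixing weights.
    weights = softmax([logits[i] for i in chosen])
    output = [0.0] * len(x)
    for weight, index in zip(weights, chosen):
        expert_out = experts[index](x)  # only the chosen experts are evaluated
        output = [o + weight * v for o, v in zip(output, expert_out)]
    return output, chosen


# Four toy experts and an illustrative, hand-written gate (assumed values).
experts = [make_expert(s) for s in (1.0, 2.0, 3.0, 4.0)]
gate_weights = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]

output, chosen = moe_forward([2.0, 1.0], gate_weights, experts, top_k=2)
print("experts used:", chosen)
print("blended output:", output)
```

In this sketch, adding more experts increases the total number of parameters, while the work done per input depends only on `top_k`. That is the general reason MoE models can grow very large without a proportional increase in compute per example.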

Future of AI: mega data, mega computing power, and mega models

BAAI, founded in 2018, positions itself as the “OpenAI plus DeepMind of China”. Well funded and staffed with world-class talent, all three organizations aim to solve the fundamental problems that hinder progress towards Artificial General Intelligence. They focus on research with the potential to enable significant breakthroughs in deep learning and to power headline-making, record-smashing applications that were previously unimaginable.

BAAI did not limit its research to the Chinese context. WUDAO 2.0 has been trained on both Chinese and English text and image data. In fact, one of its sub-models, the Generative Language Model (GLM), has adopted a new pre-training paradigm that avoids the bottlenecks of BERT and GPT. GLM 2.0 is also the largest general-purpose pre-trained model for English. It uses a single model to achieve the best results in both text understanding and text generation tasks, and it surpasses the results of Microsoft’s Turing-NLG with fewer parameters.

BAAI’s objective is to transform data into fuel for the deep learning applications of the future. Therefore, besides having already open-sourced some of its key systems and technologies, BAAI will offer WUDAO 2.0 through APIs with the potential for commercialization, similar to OpenAI’s GPT-3 model.

TECHENGINES.AI positions itself as the Automation Enabler of the insurance industry. It specializes in offering AIoT solutions. Thanks to its deep knowledge of the core insurance business and its extensive technology expertise, TECHENGINES.AI also helps the insurance industry optimize its operations through AIoT applications.