Very expensive hardware systems (i.e. money) have played a major role in recent advances in Deep Learning AI.

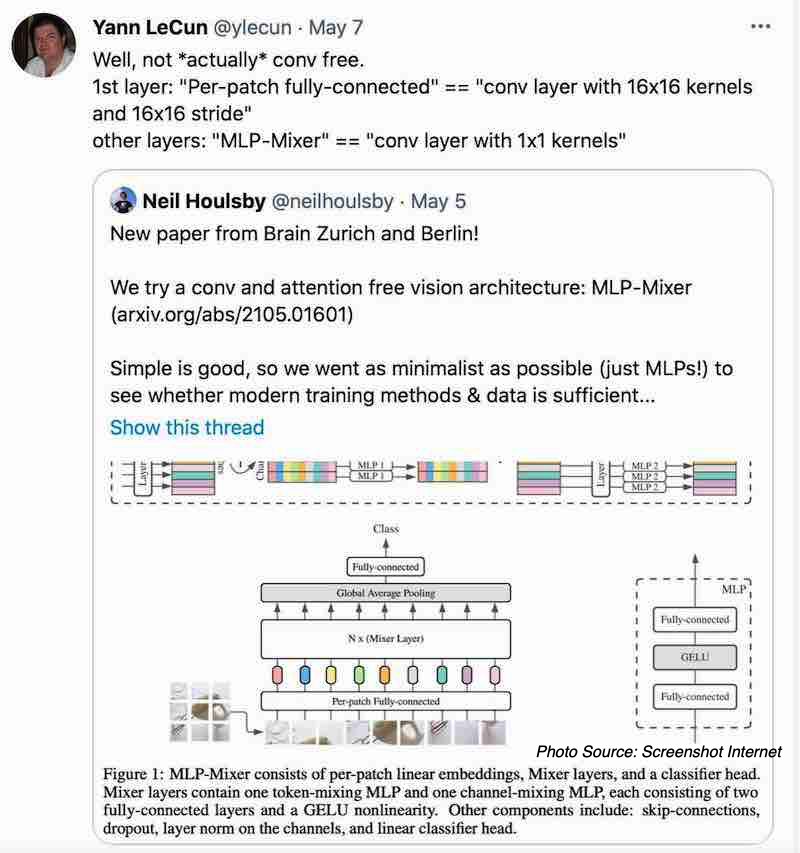

Nowadays we are used to seeing many new Deep Learning AI papers published every day; the field moves so fast that it is sometimes hard to keep track. On the free e-print archive arXiv, the subject “Computer Vision and Pattern Recognition” alone averaged 38 new papers per day in May. This time, one paper released by Google on 4 May, MLP-Mixer: An all-MLP Architecture for Vision, made some serious noise, partly because of its very “Google-style” title with “all-MLP”.

Contrary to what you might think, MLPs (multi-layer perceptrons) are not new: they were a popular machine learning solution back in the 1980s. CNNs (Convolutional Neural Networks) later revolutionized Deep Learning thanks to their strong inductive bias, that is, built-in assumptions such as locality and weight sharing that let the network make far more efficient use of limited data and computing power.
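To make the efficiency argument concrete, here is a rough sketch comparing the parameter count of a fully connected (MLP) layer with that of a 3×3 convolution producing an output of the same size. The input size (a 32×32 RGB image), the 16 output channels and the helper names `dense_params` and `conv_params` are illustrative assumptions, not taken from any of the papers discussed.

```python
def dense_params(h: int, w: int, c_in: int, c_out: int) -> int:
    """Weights + biases of a fully connected layer mapping an h*w*c_in input
    to an h*w*c_out output: every output unit is connected to every input value."""
    n_in = h * w * c_in
    n_out = h * w * c_out
    return n_in * n_out + n_out


def conv_params(k: int, c_in: int, c_out: int) -> int:
    """Weights + biases of a k x k convolution. Each output channel has one small
    kernel that only looks at a k x k neighborhood (locality) and is reused at
    every spatial position (weight sharing), so image size does not matter."""
    return k * k * c_in * c_out + c_out


if __name__ == "__main__":
    # Hypothetical sizes for illustration: 32x32 RGB input, 16 output channels.
    dense = dense_params(32, 32, 3, 16)
    conv = conv_params(3, 3, 16)
    print(f"dense: {dense:,} parameters")
    print(f"conv:  {conv:,} parameters")
```

With these sizes the dense layer needs about 50 million parameters, while the convolution needs 448. Those two assumptions, locality and weight sharing, are what let a CNN learn from far less data and compute than an unconstrained MLP of the same width.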

That is why academic gurus such as Yann LeCun did not think much of industry tech giants’ advances achieved mainly by piling up data and GPUs; in other words, money! LeCun retweeted the paper above, disputed its claim of being free of convolutions, and went on to question the real value of such research.

Deep Learning AI Study Circle: MLP->CNN->Transformer->MLP?

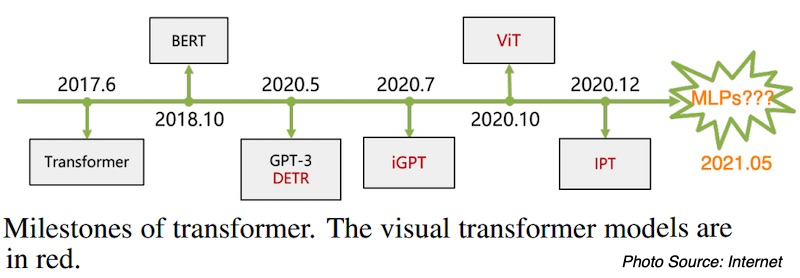

Google, the de facto industry leader in Deep Learning AI, had already started exploring the power of data and computing power in 2017, long before circling back to MLPs. Another “Google-style” paper, Attention Is All You Need, was published in June 2017 under the subject “Computation and Language (cs.CL)”, with the goal of improving NLP (Natural Language Processing). It introduced a simple network architecture, the Transformer, based solely on attention mechanisms and dispensing with recurrence and convolutions entirely.
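For readers wondering what “based solely on attention” means in practice, the core operation of the Transformer is scaled dot-product attention, softmax(QKᵀ/√d_k)·V: every token builds its output as a weighted average of all tokens’ values, with weights given by query/key similarity. Below is a minimal single-head sketch in plain Python; the helper names are hypothetical, and Q, K and V are passed in directly as small lists.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product a @ b."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(m: Matrix) -> Matrix:
    return [list(col) for col in zip(*m)]


def softmax(row: List[float]) -> List[float]:
    """Numerically stable softmax over one row (subtract the max first)."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """softmax(Q K^T / sqrt(d_k)) V for a single head, without masking.
    Sketch only: no learned projections and no multiple heads."""
    d_k = len(k[0])
    scores = matmul(q, transpose(k))                      # query/key similarities
    scaled = [[s / math.sqrt(d_k) for s in row] for row in scores]
    weights = [softmax(row) for row in scaled]            # each row sums to 1
    return matmul(weights, v)                             # weighted average of values


if __name__ == "__main__":
    x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # three toy "token" vectors
    print(scaled_dot_product_attention(x, x, x))
```

In the actual Transformer, Q, K and V are learned linear projections of the token embeddings, and several such heads run in parallel (multi-head attention); this sketch leaves those parts out to show only the mixing step that replaces recurrence and convolution.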

The Transformer soon proved particularly effective for NLP. The overwhelming success of pretrained models such as BERT and GPT-2/GPT-3 has made the Transformer the new standard for NLP tasks. Its range of application has since expanded to computer vision, where it serves as an alternative to the established convolutional neural networks (CNNs). Google itself published the paper An Image is Worth 16x16 Words in October 2020, introducing ViT (Vision Transformer) for image recognition. Since then, the directions of advanced NLP and CV research have started to converge.
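The “16x16 words” in the ViT title refers to cutting an image into 16×16-pixel patches and treating each flattened patch as one token, just as a sentence is a sequence of word tokens. The sketch below shows only that patch-splitting step in plain Python; `patchify` is a hypothetical helper name, and the 224×224 RGB input is an assumed (though common) image size.

```python
from typing import List

Image = List[List[List[float]]]  # indexed as image[row][col][channel]


def patchify(image: Image, patch: int) -> List[List[float]]:
    """Split an H x W x C image into non-overlapping patch x patch tiles and
    flatten each tile into one vector (one "word"/token), in row-major order."""
    h, w = len(image), len(image[0])
    if h % patch or w % patch:
        raise ValueError("image size must be divisible by the patch size")
    tokens = []
    for top in range(0, h, patch):
        for left in range(0, w, patch):
            vec = [
                value
                for r in range(top, top + patch)
                for c in range(left, left + patch)
                for value in image[r][c]
            ]
            tokens.append(vec)
    return tokens


if __name__ == "__main__":
    # Assumed input: a blank 224x224 RGB image.
    img = [[[0.0, 0.0, 0.0] for _ in range(224)] for _ in range(224)]
    tokens = patchify(img, 16)
    print(len(tokens), len(tokens[0]))
```

A 224×224×3 image yields (224/16)² = 196 tokens of 16·16·3 = 768 values each. In ViT, each flattened patch is then linearly projected and combined with a position embedding before entering a standard Transformer encoder; those steps are omitted here.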

Interestingly, the MLP-Mixer paper now under the spotlight came from the same ViT team at Google. Inevitably, this made researchers wonder where the field is heading: are we really going round in circles?

The data and computing power (i.e. money) of tech giants can build solid barriers in AI development.

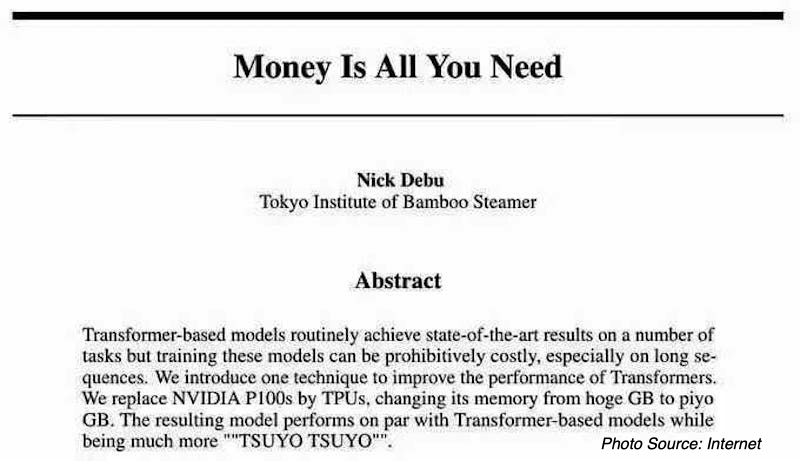

One of the key points in the heated discussion is the cost of achieving the results published in the paper. Although the proposed architecture is simple, results trained on JFT-300M (a Google-owned dataset) with virtually unlimited TPU usage are neither affordable nor reproducible for anyone else.

Similarly, the famed GPT-3 model, with 96 layers and 175 billion parameters, was trained on specialized hardware such as the supercomputer Microsoft built in collaboration with OpenAI. The cost of researching and developing GPT-3 has been estimated at between $11.5 million and $27.6 million, plus the overhead of parallel GPUs.

No wonder a meme of a fake paper titled “Money Is All You Need” quickly went viral in the Deep Learning AI community.

It is true. Small and medium-sized companies should leverage the tech giants’ findings and focus on optimizing their specific business.

How to avoid a future in which only the tech giants control new developments in AI is a potential new ethical issue. “Hello, Attention Is Needed Here!”

TECHENGINES.AI positions itself as the Automation Enabler of the insurance industry, specializing in AIoT solutions. Thanks to its deep knowledge of the insurance core business and its extensive technology competency, TECHENGINES.AI also helps the insurance industry apply optimizations through AIoT applications.