April Fool? Keras: Killed by Google

Keras is almost a household name in the Data Science community. On 28 March, MIT CSAIL tweeted to celebrate Keras's 6th birthday. However, only a few days later, on 1 April, a post titled "Keras: Killed by Google" sparked a heated discussion on Reddit.

Keras is an open-source software library that provides a Python interface for artificial neural networks. Its primary author and maintainer is François Chollet, a Google engineer.

Keras already supported another neural network (NN) backend, Theano, before Google open-sourced TensorFlow in November 2015. Starting with Keras v1.1.0, TensorFlow became the default NN backend. Keras's stable, consistent, simple and framework-agnostic APIs are widely appreciated by the Data Science community, and they helped TensorFlow claim the throne among Deep Learning frameworks. In 2019, TensorFlow 2.0 arrived with tight Keras integration through the intuitive high-level API tf.keras, which was also what triggered the Reddit post mentioned above.

The No. 1 position is never easy to hold. In 2017, Facebook threw its hat into the ring with PyTorch, a more Pythonic Deep Learning framework built around dynamic computation graphs. The competition between these two tech giants became so fierce that on 28 September 2017, Yoshua Bengio announced in an open letter that Theano would no longer be actively developed.

Since then, the war among Deep Learning frameworks has effectively become a contest between TensorFlow/Keras, PyTorch and others (less than 5%).

Is it true that PyTorch has taken the throne from TensorFlow to become the No. 1 Deep Learning framework?

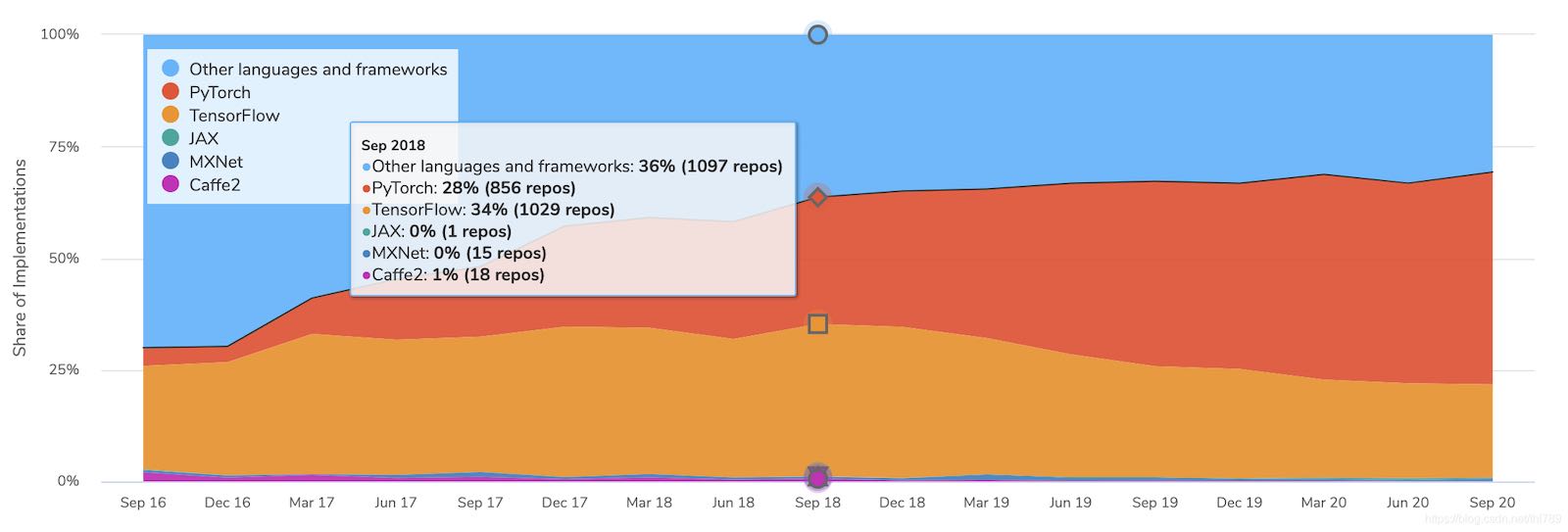

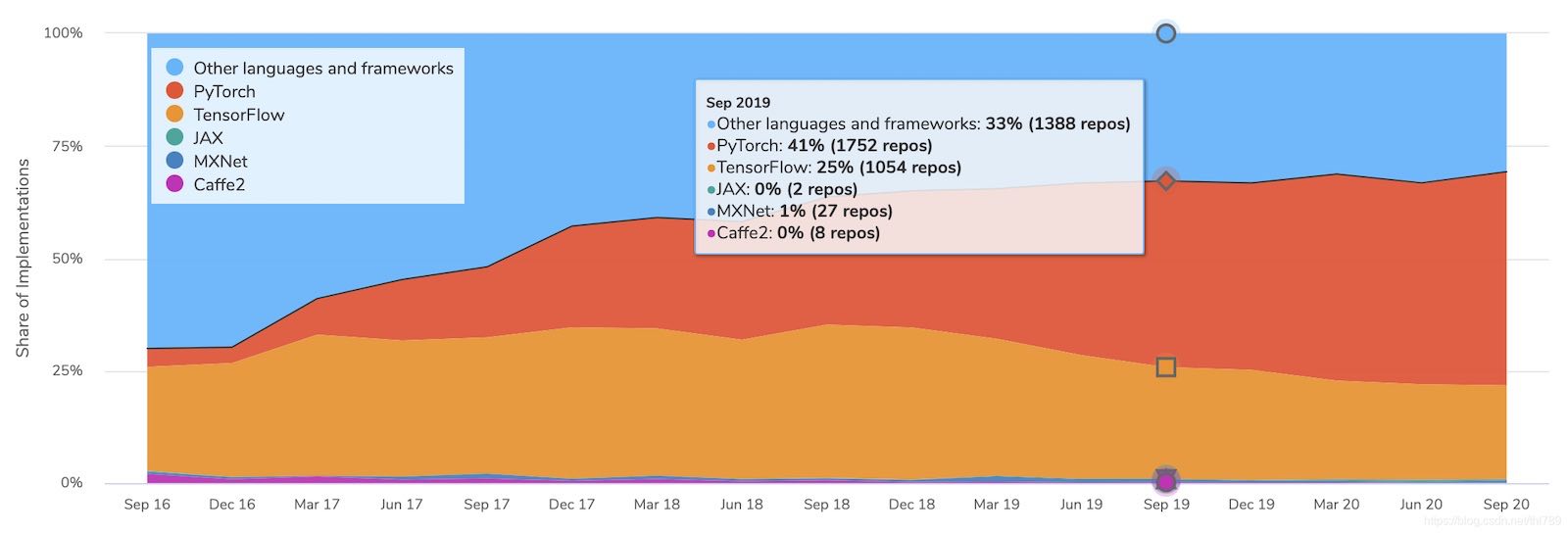

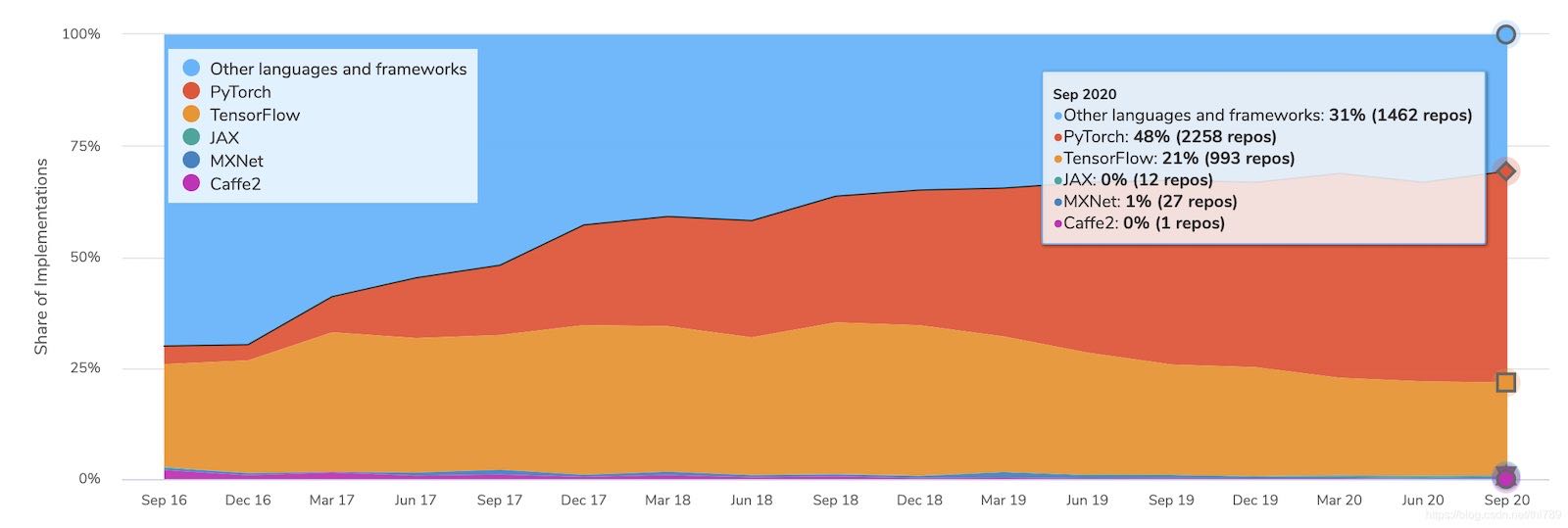

The charts show statistics from https://paperswithcode.com/trends on the Deep Learning frameworks used by GitHub repositories.

From these charts, one can draw the following conclusions:

- The trend over 2018, 2019 and 2020 is essentially PyTorch squeezing out TensorFlow and the other frameworks.

- PyTorch has in fact gained clear dominance in the research field.

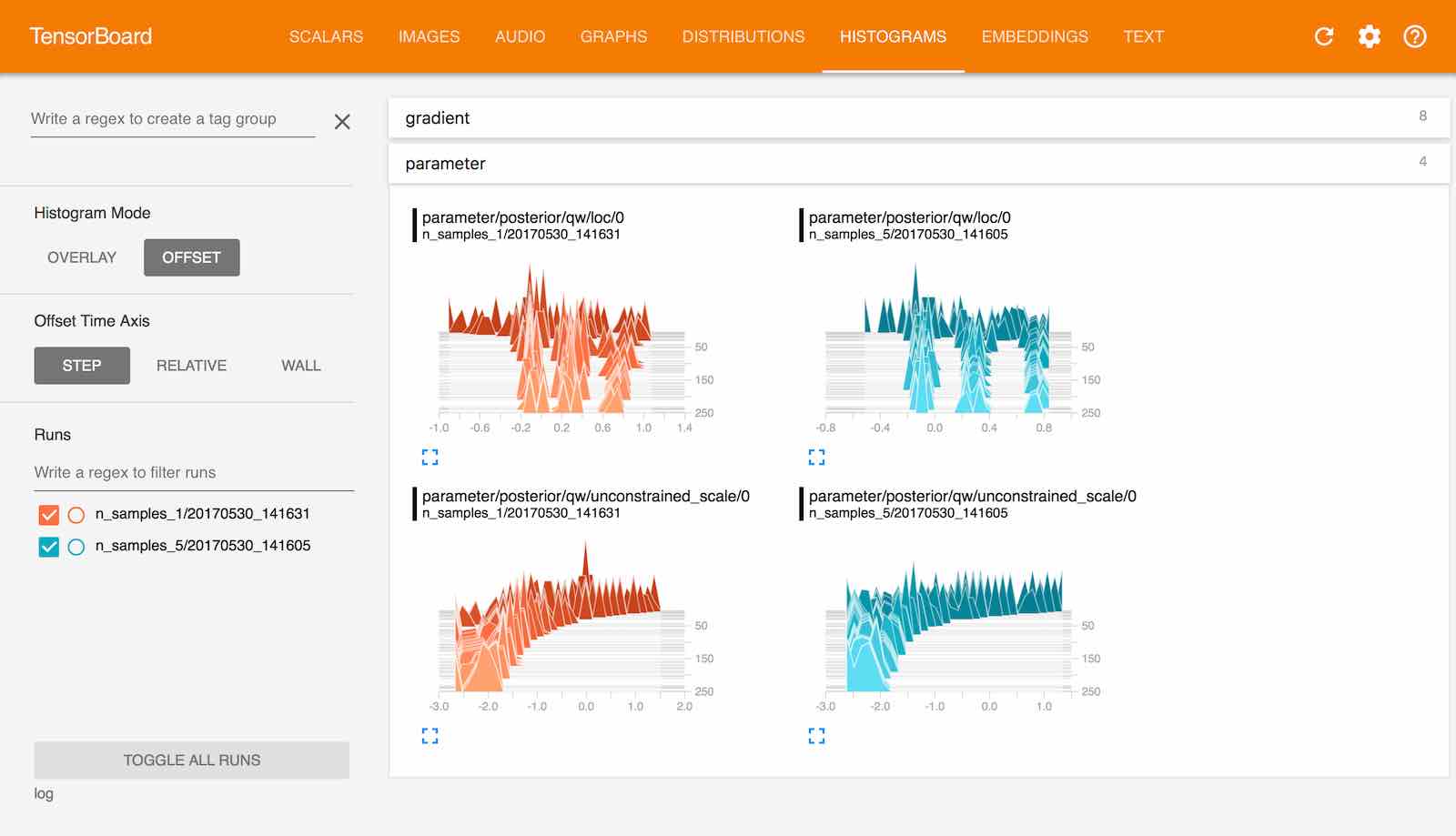

However, it is harder to say what is happening in industry, because the needs of researchers and industry differ. Researchers care about fast and easy iteration on their research, usually with relatively small datasets. Industry gives much higher priority to deployment and performance. TensorFlow has some natural advantages over PyTorch in production, because it was built around these requirements from the start and provides solutions for mobile and serving scenarios (TensorFlow Lite and TensorFlow Serving). In terms of visualization, TensorFlow's TensorBoard offers much more powerful functionality than Visdom, the visualization tool commonly used with PyTorch.

Driven by this competition, Deep Learning frameworks continue to evolve.

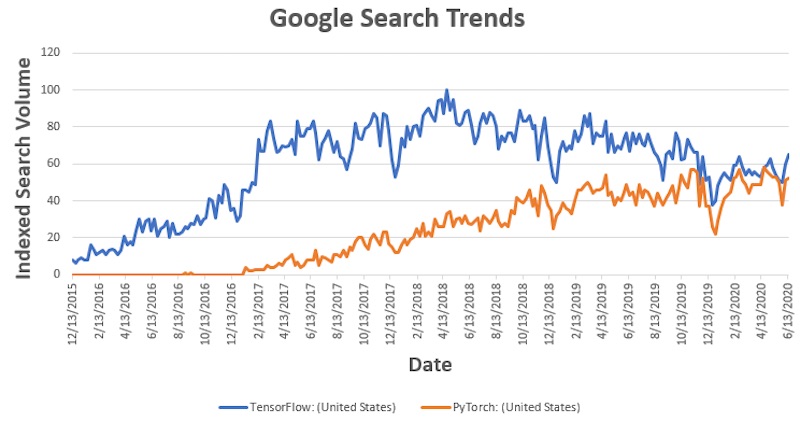

Backed by two tech giants, Google and Facebook, TensorFlow and PyTorch are unlikely to end their war any time soon. Google Trends search statistics up to 2020 showed the two frameworks still going toe to toe.

Moreover, both frameworks are learning from each other. TensorFlow 2.0 made eager execution, which evaluates operations immediately, the default, similar to PyTorch's dynamic computation graphs. Newer versions of PyTorch, in turn, can convert models into static graphs (TorchScript) for easier deployment.
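Eager execution and static graphs differ mainly in *when* the computation happens. The toy below is framework-free and assumes nothing beyond plain Python; it is not how TensorFlow or PyTorch are implemented, and the names `eager_affine`, `placeholder`, `Node` and `run` are hypothetical. `eager_affine` computes its result immediately, as eager mode does, while the graph version first records the operations and only evaluates them when `run` is called, which is the idea behind static graphs.

```python
# Toy illustration of eager vs. deferred (static graph) execution.
# Hypothetical names; NOT the internals of TensorFlow or PyTorch.


def eager_affine(x, w, b):
    """Eager style: each operation runs immediately and returns a value."""
    y = x * w  # computed right now
    return y + b  # computed right now


class Node:
    """A node in a tiny static graph: an operation plus its input nodes."""

    def __init__(self, op, *inputs, name=None):
        self.op = op          # "input", "mul" or "add"
        self.inputs = inputs  # upstream Node objects
        self.name = name      # key into the feed dict for "input" nodes

    def __mul__(self, other):
        return Node("mul", self, other)  # records the op, computes nothing

    def __add__(self, other):
        return Node("add", self, other)  # records the op, computes nothing


def placeholder(name):
    """Create an input node whose value is supplied later, at run time."""
    return Node("input", name=name)


def run(node, feed):
    """Graph style: evaluate a previously built graph given input values."""
    if node.op == "input":
        return feed[node.name]
    args = [run(n, feed) for n in node.inputs]
    if node.op == "mul":
        return args[0] * args[1]
    if node.op == "add":
        return args[0] + args[1]
    raise ValueError(f"unknown op {node.op!r}")


# Build the graph once; nothing is computed at this point.
x, w, b = placeholder("x"), placeholder("w"), placeholder("b")
graph = x * w + b

print(eager_affine(3.0, 2.0, 1.0))                 # 7.0
print(run(graph, {"x": 3.0, "w": 2.0, "b": 1.0}))  # 7.0
```

Because a prebuilt graph exists independently of the Python code that created it, it can be inspected, optimized and exported, which is why static graphs suit deployment; eager code, on the other hand, is easier to debug line by line.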

At the same time, other high-performance machine learning frameworks are getting more attention. For example, Google started promoting JAX in 2018. JAX, the direct successor to Autograd, is not exactly a Deep Learning framework: it is a linear algebra library with automatic differentiation that uses XLA to compile and run NumPy programs on GPUs and TPUs. XLA is a domain-specific compiler for linear algebra that can also accelerate TensorFlow models with potentially no source code changes. The combination of automatic differentiation and XLA-compiled numerical code is what makes JAX suitable for training neural network models.

On top of JAX, several research-oriented Deep Learning frameworks, such as Haiku, Flax, Trax and Elegy, have emerged to further enrich the ecosystem.
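In JAX, differentiation is a transformation applied to ordinary numerical Python functions: `jax.grad(f)` returns a new function that computes the gradient of `f`. The sketch below illustrates automatic differentiation itself, assuming only the Python standard library. It uses forward-mode differentiation with dual numbers, a simpler technique than JAX's tracing-based implementation (JAX offers forward mode via `jax.jvp` and reverse mode via `jax.grad`), and it leaves out XLA compilation entirely. `Dual`, `sin` and `grad` are hypothetical names chosen for this illustration.

```python
# Forward-mode automatic differentiation with dual numbers, in plain Python.
# Illustrates the idea of autodiff only; JAX is implemented very differently.
import math


class Dual:
    """A number val + dot*eps with eps**2 == 0; `dot` carries the derivative."""

    def __init__(self, val, dot=0.0):
        self.val = val
        self.dot = dot

    @staticmethod
    def _lift(x):
        # Treat plain numbers as constants (derivative 0).
        return x if isinstance(x, Dual) else Dual(x)

    def __add__(self, other):
        other = Dual._lift(other)
        # Sum rule: (u + v)' = u' + v'
        return Dual(self.val + other.val, self.dot + other.dot)

    __radd__ = __add__

    def __mul__(self, other):
        other = Dual._lift(other)
        # Product rule: (uv)' = u'v + uv'
        return Dual(self.val * other.val,
                    self.dot * other.val + self.val * other.dot)

    __rmul__ = __mul__


def sin(x):
    # Chain rule: sin(u)' = cos(u) * u'
    return Dual(math.sin(x.val), math.cos(x.val) * x.dot)


def grad(f):
    """Hypothetical toy analogue of jax.grad for scalar functions."""
    def df(x):
        return f(Dual(x, 1.0)).dot  # seed dx/dx = 1
    return df


def f(x):
    return 3 * x * x + sin(x)  # analytically, f'(x) = 6x + cos(x)


print(grad(f)(0.0))  # 1.0
print(grad(f)(2.0))  # 12 + cos(2), about 11.584
```

The derivative is computed exactly (up to floating point) by propagating the sum, product and chain rules through each operation, rather than by numerical finite differences.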

At TECHENGINES.AI, we thank the contributors to all of the above frameworks for continually bringing valuable improvements to the foundations of the Deep Learning community. As users, we are framework agnostic: we analyse the pros and cons of each framework and select the most suitable ones for each specific need. We use both TensorFlow/Keras and PyTorch, and we keep close track of the latest developments in other frameworks. Our mission is to develop AI solutions specialized for the insurance industry, leveraging the most advanced Deep Learning frameworks to make insurance simple, convenient and easy to access for end customers.