A systematic review of 2,212 studies on new machine-learning-based AI models for diagnosing or prognosticating COVID-19

In 2020, coronavirus disease 2019 (COVID-19) ravaged the world. Machine learning and Artificial Intelligence (AI) were considered promising and potentially powerful techniques for detecting the disease and predicting its course. To help doctors screen potential patients more quickly and accurately, data scientists around the world published thousands of studies describing machine-learning-based models and claimed that these models could diagnose or prognosticate COVID-19 from standard-of-care chest radiographs (CXR) and chest computed tomography (CT) images.

However, the analysis "Common pitfalls and recommendations for using machine learning to detect and prognosticate for COVID-19 using chest radiographs and CT scans", published in Nature Machine Intelligence on 15 March 2021, revealed that none of these models is suitable for detecting or diagnosing COVID-19 from standard medical imaging, owing to data constraints and methodological flaws.

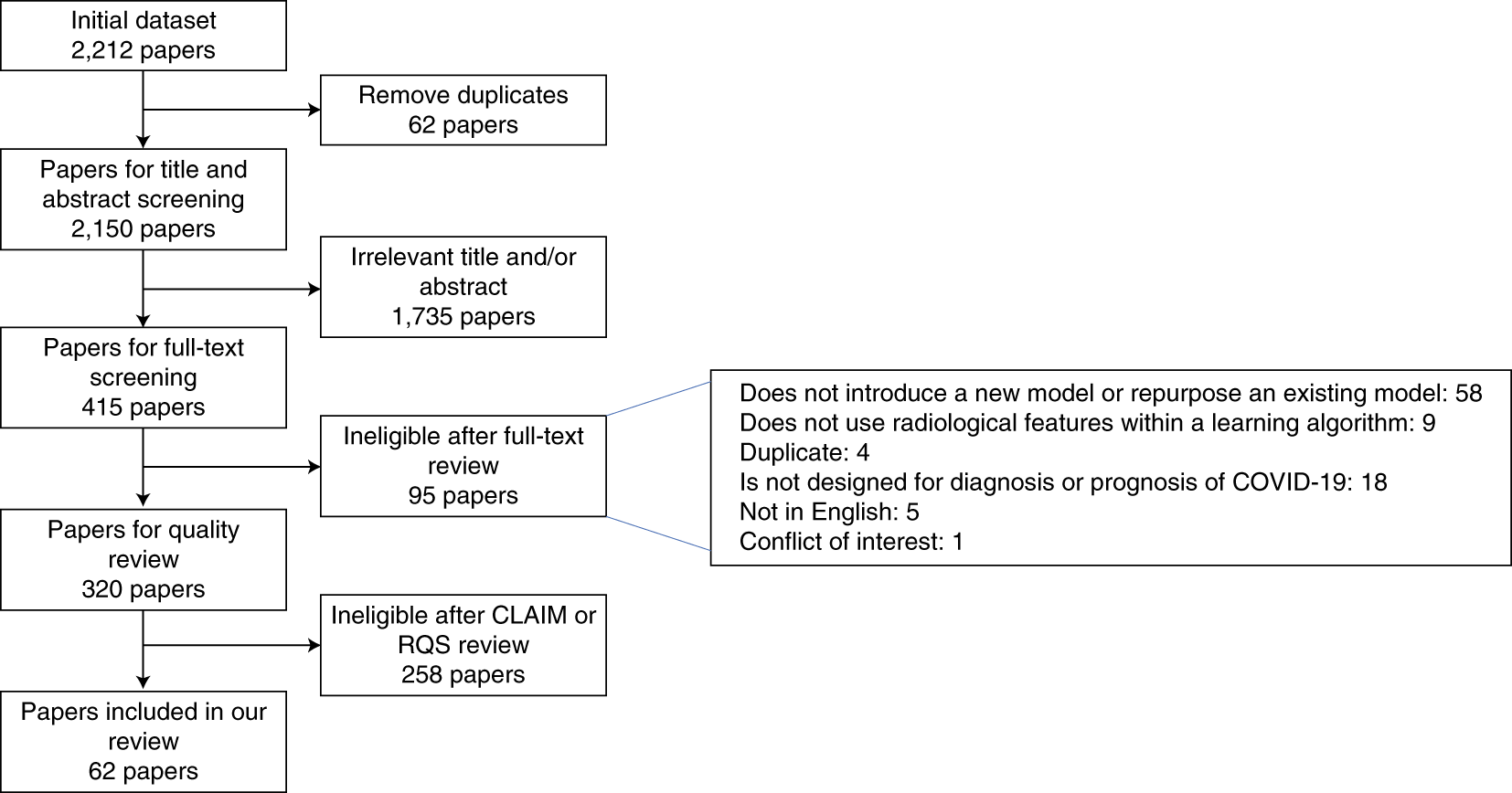

The researchers followed the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) checklist and reviewed 2,212 studies, both published papers and preprints, from the period 1 January 2020 to 3 October 2020. Only 415 studies passed the initial screening, and of these, 62 were included in the review after quality screening.

PRISMA is an evidence-based minimum set of items for reporting in systematic reviews and meta-analyses. PRISMA focuses on the reporting of reviews evaluating randomized trials, but can also be used as a basis for reporting systematic reviews of other types of research, particularly evaluations of interventions.

Fig. 1: PRISMA flowchart for the systematic review

Key challenges for AI technology in delivering clinical impact

AI technology in healthcare continues to advance rapidly. The research cited above demonstrated the key challenges in translating study results into clinical practice.

- Data quality constraints

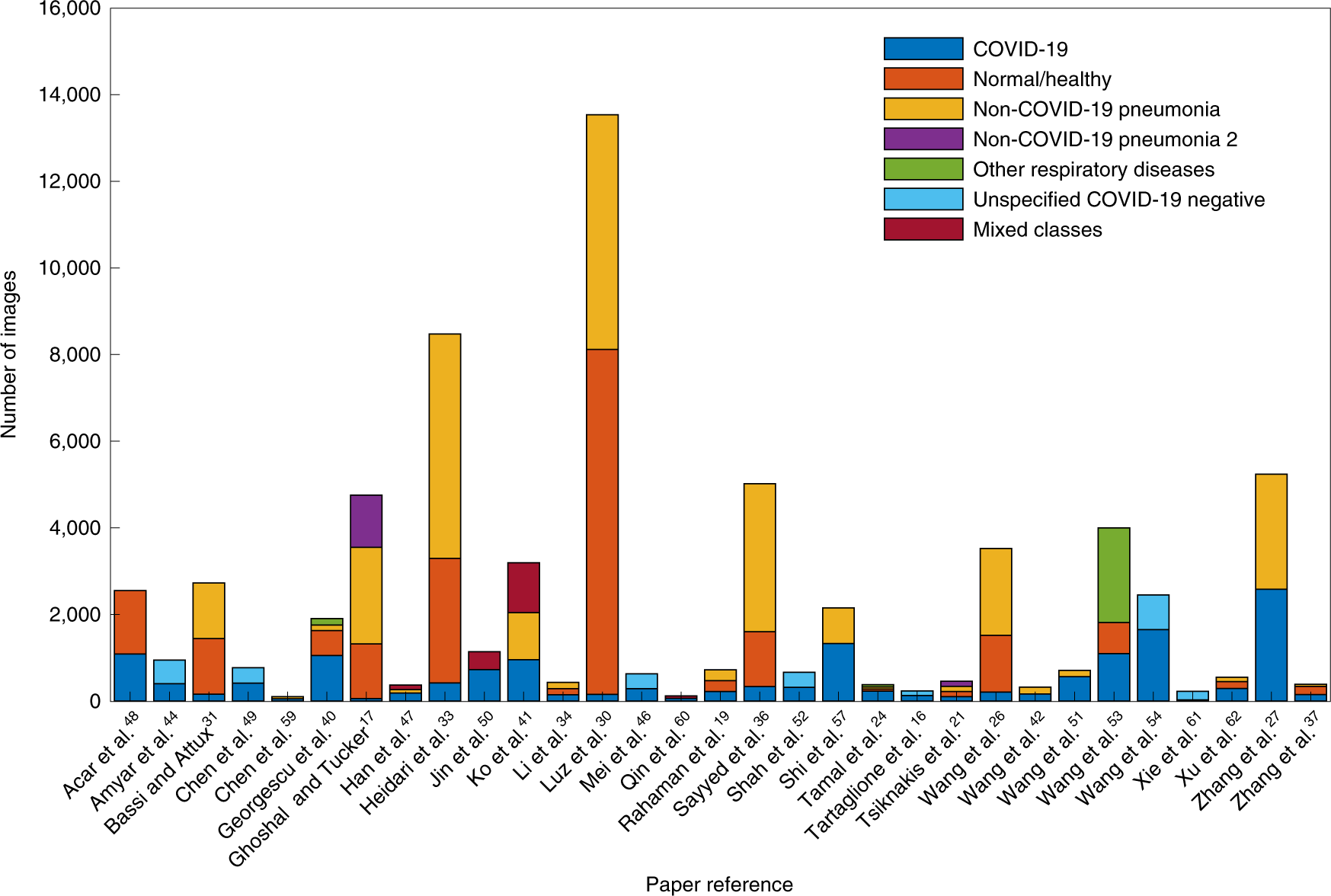

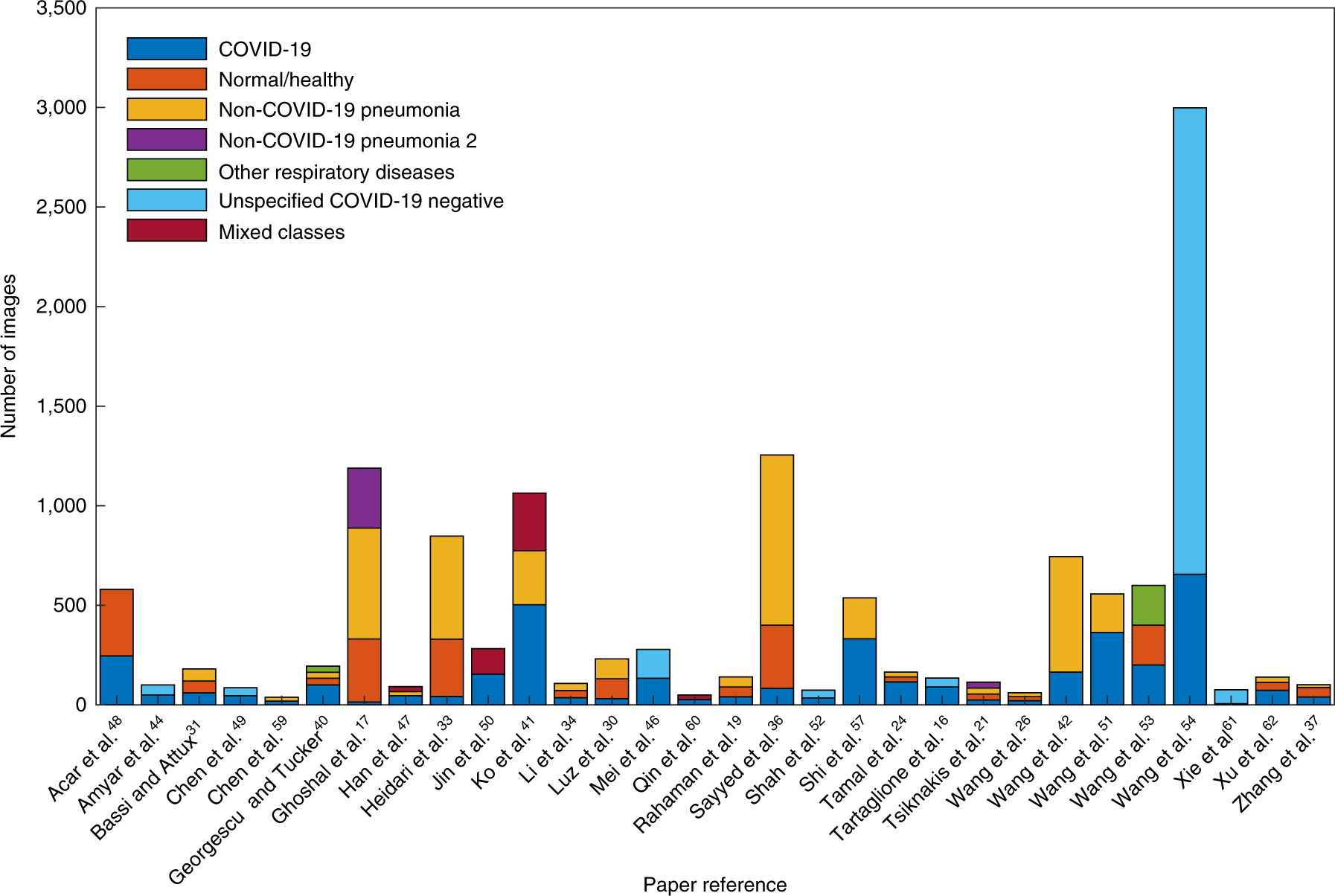

- Overall, the datasets are relatively small (see Fig. 2 and Fig. 3).

- 32 of the 62 models used public datasets. Public datasets are inherently prone to bias because it is not possible to verify whether patients are truly COVID-19 positive or whether the data carry underlying selection biases.

- 33 of the 62 models used private datasets: 21 used data from mainland China, 3 used data from France, and the remainder used data from Iran, the United States, Belgium, Brazil, Hong Kong and the Netherlands. Private datasets in which the diagnosis was based on a positive RT–PCR or antibody test have a lower risk of bias. However, international variability introduced other risks of bias.

- Poor integration when public and private datasets are combined

- Fig. 2: The number of images used in each paper for model training, split by image class.

- Fig. 3: The number of images used for model testing, split by image class.

- Methodological flaws

- Insufficient attention to excluding obviously biased data

- Unclear definition of a control group

- Ground truth assigned using the images themselves

- Using a non-established reference standard to define outcomes

- Unclear applicability of certain techniques. For example, many papers used transfer learning to develop their models, which assumes an inherent performance benefit. However, it is unclear whether transfer learning offers a large performance benefit, given the over-parameterization of the models.
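The dataset counts in the list above (32 models on public data, 33 on private data, out of 62 reviewed) add up to more than 62, so some models must have drawn on both kinds of data. A minimal sketch of that inclusion-exclusion arithmetic, assuming every model used at least one public or private dataset; that assumption and the function name `models_using_both` are illustrative, not taken from the review:

```python
def models_using_both(public: int, private: int, total: int) -> int:
    """Inclusion-exclusion: |A and B| = |A| + |B| - |A or B|.

    Assumes every model used at least one public or private dataset,
    so |A or B| equals the total number of models reviewed.
    """
    overlap = public + private - total
    if overlap < 0 or overlap > min(public, private):
        raise ValueError("counts are inconsistent with the assumption")
    return overlap


# Counts quoted in the data-quality list.
print(models_using_both(public=32, private=33, total=62))  # → 3
```

Under that assumption, at least three models combined public and private data, which is the mixed setting where poor integration becomes a risk.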

Moreover, few of the algorithms and models were made publicly available. Only 13 of the 62 papers published the code needed to reproduce their results (seven of them including their pre-trained parameters), and one stated that the code was available on request.

The high risk of bias and the lack of reproducibility cast serious doubt on the performance these models claim; the reported results were probably overly optimistic.

Frankenstein datasets

The grotesque appearance of Frankenstein's monster is often read as a metaphor for the harmful consequences of humans failing to use science and technology responsibly.

The researchers introduced the concept of “Frankenstein datasets”: datasets assembled from multiple other datasets and redistributed under new names. Such datasets make it harder to validate data sources and increase the risk of duplication. For example, when the dataset used to train an algorithm is composed of N subsets, the developer may not realize that one of the subsets already contains components of the others. This repackaging can lead to algorithms being trained and tested on identical or overlapping data, which inflates performance metrics.
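One practical guard against the overlap described above is to fingerprint every image by its content and check whether any fingerprint appears in both the training and the test split. The sketch below assumes the images are plain files on disk and uses SHA-256 from Python's standard library; the directory layout and the function names are hypothetical. Exact hashing only catches byte-identical copies; re-encoded or resized duplicates would need perceptual hashing, which is not shown here.

```python
import hashlib
import tempfile
from pathlib import Path


def file_digest(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the SHA-256 hex digest of a file's bytes, read in chunks."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def index_split(root: Path) -> dict[str, list[Path]]:
    """Map each content digest to every file under root that has it."""
    index: dict[str, list[Path]] = {}
    for path in sorted(root.rglob("*")):
        if path.is_file():
            index.setdefault(file_digest(path), []).append(path)
    return index


def cross_split_duplicates(
    train_root: Path, test_root: Path
) -> dict[str, tuple[list[Path], list[Path]]]:
    """Return digests present in both splits, with the files on each side."""
    train = index_split(train_root)
    test = index_split(test_root)
    return {d: (train[d], test[d]) for d in train.keys() & test.keys()}


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        # Simulate a "Frankenstein" assembly: the same image reaches both
        # splits through two differently named source subsets.
        (root / "train" / "subset_a").mkdir(parents=True)
        (root / "test" / "subset_b").mkdir(parents=True)
        (root / "train" / "subset_a" / "img_001.png").write_bytes(b"same pixels")
        (root / "test" / "subset_b" / "case_17.png").write_bytes(b"same pixels")
        (root / "test" / "subset_b" / "case_18.png").write_bytes(b"other pixels")

        dups = cross_split_duplicates(root / "train", root / "test")
        print(len(dups))  # → 1
```

Running such a check whenever subsets are merged makes silent train/test leakage visible before any metric is reported.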

The study's recommendations are useful for all algorithm developers, not only for COVID-19

The researchers gave detailed recommendations in five domains: (1) considerations when collating COVID-19 imaging datasets that are to be made public; (2) methodological considerations for algorithm developers; (3) specific issues about reproducibility of the results in the literature; (4) considerations for authors to ensure sufficient documentation of methodologies in manuscripts; and (5) considerations for reviewers performing peer review of manuscripts.

In fact, these recommendations are valuable for all algorithm developers and for every application of algorithms. At TECHENGINES.AI, we have helped our clients in the insurance industry review their algorithms and successfully identify those that focused solely on achieving certain loss indicators, without recognizing that such algorithms could cause serious errors such as under-reserving.

TECHENGINES.AI follows these principles in its practice:

- The involvement of specialists and business knowledge is fundamental.

- Datasets should be representative of the objective of the models.

- Performance metrics can be deceiving without an understanding of the details of the algorithms.

- External validation should be used to test and improve the robustness of the models.
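The principles above, particularly representative data and external validation, can be made concrete with a source-level hold-out: instead of splitting images at random, keep every record from one data source out of training and evaluate only on it, so the test set comes from a population the model has not seen. A minimal sketch in plain Python; the `Record` fields (`source`, `patient_id`) and the site names are hypothetical, and the patient check reflects the assumption that one patient's images must never straddle the split.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class Record:
    """One imaging record; the field names are illustrative assumptions."""
    image_id: str
    patient_id: str
    source: str  # hospital, country or public repository of origin
    label: int   # 1 = COVID-19 positive, 0 = negative


def external_split(
    records: Iterable[Record], external_source: str
) -> tuple[list[Record], list[Record]]:
    """Hold out every record from one source as an external test set.

    Raises ValueError if the source is absent, or if any patient appears on
    both sides, which would mean the "external" set is not independent.
    """
    rows = list(records)
    train = [r for r in rows if r.source != external_source]
    test = [r for r in rows if r.source == external_source]
    if not test:
        raise ValueError(f"no records from source {external_source!r}")
    shared = {r.patient_id for r in train} & {r.patient_id for r in test}
    if shared:
        raise ValueError(f"patients in both splits: {sorted(shared)}")
    return train, test


if __name__ == "__main__":
    data = [
        Record("img1", "p1", "hospital_a", 1),
        Record("img2", "p2", "hospital_a", 0),
        Record("img3", "p3", "public_repo", 1),
        Record("img4", "p4", "hospital_b", 0),
    ]
    train, test = external_split(data, external_source="hospital_b")
    print([r.image_id for r in train], [r.image_id for r in test])
    # → ['img1', 'img2', 'img3'] ['img4']
```

Repeating the split with each source held out in turn (leave-one-source-out) shows how strongly the reported performance depends on where the data came from.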